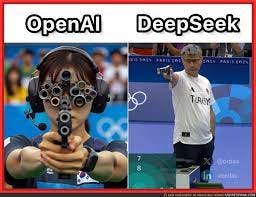

🕵🏻♀️ DeepSeek's secret sauce: Inside the most efficient AI model

The $500 Billion Bet: Understanding AI's Mega-Clusters

Why is DeepSeek so disruptive? Everyone in AI is talking about it, and its impact on the market is already visible: Nvidia lost nearly $600Bn in market value in a single day. Its AI Assistant, powered by DeepSeek-V3, has also overtaken rival ChatGPT to become the top-rated free application on Apple's App Store in the US.

While everyone has their own theory, it is best to listen to AI researchers and experts and take a deep dive.

Presenting big ideas from Lex Fridman's five-hour conversation with Dylan Patel and Nathan Lambert. Both are highly respected, and widely read and listened to by experts, researchers, and engineers in the field of AI.

Dylan runs SemiAnalysis, a well-respected research and analysis company that specializes in semiconductors, GPUs, CPUs, and AI hardware in general. Nathan is a research scientist at the Allen Institute for AI and the author of Interconnects, an amazing blog on AI.

The AI world was recently shaken by the emergence of DeepSeek's models, sparking discussions about open weights, training efficiency, and the implications for the AI landscape. This conversation with Dylan Patel and Nathan Lambert explores DeepSeek's V3 and R1 models, dissecting their architecture, training processes, and potential impact on the industry, from open-source initiatives to geopolitical considerations.

They examine the nuances of open weights, the differences between pre-training and post-training, and the specific innovations that contribute to DeepSeek's impressive performance and cost-effectiveness.

Key Takeaways:

DeepSeek V3 and R1 Explained: DeepSeek V3 is a mixture-of-experts Transformer language model, released with open weights as an instruction-tuned model similar to ChatGPT. DeepSeek R1, a reasoning model, shares training steps with V3 but undergoes a different post-training process. The distinction lies in their specialized functions: V3 excels at generating human-like text, while R1 focuses on chain-of-thought reasoning, revealing its problem-solving process.

The Significance of Open Weights: Open weights means that the model weights are available for download. While DeepSeek's models are open weight, the debate continues on the true meaning of open-source AI. Full open source also includes open data and open code, which are not yet fully available for DeepSeek's models. Open weights give users control over their data, unlike API-based models, where data privacy depends on the hosting company.

Pre-training vs. Post-training: Pre-training involves predicting the next token in a vast dataset of internet text to create a base model. Post-training refines this base model through techniques like instruction tuning, preference fine-tuning, and reinforcement learning to achieve desired behaviors, such as helpfulness (V3) or reasoning (R1).
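To make "predicting the next token" concrete, here is a minimal sketch that swaps the neural network for a toy bigram counter. The corpus, the function names, and the bigram model itself are illustrative assumptions, not anything DeepSeek uses. A real pre-training run minimizes the same kind of cross-entropy loss, only with a Transformer and trillions of tokens.

```python
import math
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Count how often each token follows each other token."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def next_token_probs(counts, prev):
    """Turn the follow-up counts for `prev` into a probability distribution."""
    total = sum(counts[prev].values())
    return {tok: c / total for tok, c in counts[prev].items()}

def average_loss(counts, tokens):
    """Mean cross-entropy (negative log-likelihood) of each true next token."""
    losses = []
    for prev, nxt in zip(tokens, tokens[1:]):
        p = next_token_probs(counts, prev)[nxt]
        losses.append(-math.log(p))
    return sum(losses) / len(losses)

# Toy corpus (hypothetical); real pre-training uses internet-scale text.
corpus = "the cat sat on the mat the cat ate".split()
model = train_bigram(corpus)
print(next_token_probs(model, "the"))  # "cat" is twice as likely as "mat"
print(average_loss(model, corpus))     # lower is better
```

Post-training starts from a model trained this way and changes what it is rewarded for, rather than how tokens are predicted.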

DeepSeek's Efficiency Secrets: DeepSeek achieves cost efficiency through two primary innovations: the mixture of experts (MoE) architecture and MLA (Multi-head Latent Attention). MoE activates only a subset of the model's parameters for each token, dramatically reducing computation. MLA reduces the memory that attention needs during training and inference.
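A rough way to see the memory side of MLA is to compare the size of the attention cache with and without a compressed latent. The sketch below is back-of-the-envelope arithmetic only. The layer count, head count, head size, latent size, and context length are hypothetical round numbers, and it ignores the small positional component that MLA also stores.

```python
def kv_cache_bytes(n_layers, seq_len, values_per_token_per_layer, bytes_per_value=2):
    """Memory needed to cache attention state for one sequence (16-bit values by default)."""
    return n_layers * seq_len * values_per_token_per_layer * bytes_per_value

# Hypothetical model shape, chosen only for illustration.
n_layers, n_heads, head_dim = 60, 128, 128
latent_dim = 512      # assumed size of the compressed latent per token
seq_len = 32_000      # assumed context length

# Standard multi-head attention caches a key and a value vector per head.
standard = kv_cache_bytes(n_layers, seq_len, 2 * n_heads * head_dim)
# A latent-attention cache stores one compressed vector per token instead.
latent = kv_cache_bytes(n_layers, seq_len, latent_dim)

print(f"standard cache: {standard / 1e9:.1f} GB")
print(f"latent cache:   {latent / 1e9:.2f} GB")
print(f"reduction:      {standard // latent}x")
```

A smaller cache means more concurrent requests fit on the same GPU, which is where much of the serving cost goes.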

Mixture of Experts: A Game Changer: The MoE architecture allows for larger models with increased capacity while maintaining computational efficiency. By activating only relevant parts of the model, MoE reduces both training and inference costs, paving the way for more powerful and accessible AI.
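The routing idea can be sketched in a few lines. This is a generic top-k softmax router, not DeepSeek's exact design, and the expert functions, router weights, and the `make_expert` helper are hypothetical. The point it illustrates is that only the chosen experts ever run.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def make_expert(scale, name, calls):
    """Hypothetical expert: scales its input and records that it ran."""
    def expert(x):
        calls.append(name)
        return [scale * xi for xi in x]
    return expert

def moe_layer(x, router_weights, experts, top_k=2):
    """Send x to the top_k highest-scoring experts and mix their outputs."""
    # Router: one score per expert (dot product of x with that expert's router row).
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in router_weights]
    gates = softmax(scores)
    chosen = sorted(range(len(experts)), key=lambda i: gates[i], reverse=True)[:top_k]
    norm = sum(gates[i] for i in chosen)  # renormalize over the chosen experts
    out = [0.0] * len(x)
    for i in chosen:
        y = experts[i](x)  # experts that were not chosen are never evaluated
        for j, yj in enumerate(y):
            out[j] += gates[i] / norm * yj
    return out, chosen

calls = []
experts = [make_expert(s, i, calls) for i, s in enumerate([1.0, 2.0, 3.0, 4.0])]
router_weights = [[0.1, 0.0], [2.0, 0.0], [1.0, 0.0], [-1.0, 0.0]]
output, chosen = moe_layer([1.0, 0.0], router_weights, experts)
print(chosen, calls, output)
```

With four experts and `top_k=2`, half of the expert parameters sit idle for this input, which is the source of the compute savings.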

The Power of Reasoning Models: DeepSeek R1's reasoning capabilities showcase the potential of AI to not just provide answers but also demonstrate its thought process. This transparency can be invaluable for understanding how AI arrives at its conclusions, fostering trust and enabling deeper insights.

Chain-of-Thought Unveiled: R1's chain-of-thought process reveals the model's step-by-step reasoning, from problem breakdown to solution formulation. This detailed exposition of its cognitive processes sets it apart from models that only provide final answers.
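For readers who want to work with such output programmatically, here is a minimal sketch that separates the reasoning from the final answer. It assumes the reasoning is wrapped in `<think>` tags, as in R1's public releases. The `split_reasoning` helper and the sample response are hand-written for illustration, not real model output.

```python
import re

def split_reasoning(response):
    """Return (reasoning, answer); reasoning is empty if no <think> block is found."""
    match = re.search(r"<think>(.*?)</think>", response, flags=re.DOTALL)
    if match is None:
        return "", response.strip()
    reasoning = match.group(1).strip()
    answer = response[match.end():].strip()
    return reasoning, answer

# Hand-written sample (hypothetical), only to show the shape of the output.
sample = """<think>
The user asks for 17 * 6. 17 * 6 = 10 * 6 + 7 * 6 = 60 + 42 = 102.
</think>
17 * 6 = 102"""

reasoning, answer = split_reasoning(sample)
print("Reasoning:", reasoning)
print("Answer:", answer)
```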

The Role of Data in Model Quality: The quality and processing of training data are paramount to a model's performance. While DeepSeek uses publicly available data sources like Common Crawl, it likely also employs proprietary crawlers and data distillation techniques to optimize its models.

The Importance of Training Code: Access to training code is crucial for replicating and further developing AI models. While DeepSeek provides detailed technical reports, full open-source transparency would require sharing the underlying code.

Post-Training Innovations: The field of post-training is rapidly evolving, with new techniques like reinforcement learning from human feedback and preference fine-tuning constantly emerging. These advancements are key to aligning AI models with human values and preferences.

The Transformer Architecture: The Transformer, a foundational component of modern AI models, is based on repeated blocks of attention mechanisms and dense layers. DeepSeek's innovations build upon this architecture to achieve greater efficiency and performance.
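The attention half of each block can be sketched in plain Python. This is standard single-head scaled dot-product attention with a causal mask, written for readability rather than speed. It is not DeepSeek's variant, and the function name and example vectors are illustrative.

```python
import math

def causal_attention(queries, keys, values):
    """Single-head scaled dot-product attention where position t sees only positions 0..t."""
    d = len(queries[0])
    outputs = []
    for t, q in enumerate(queries):
        # Similarity of this query to every key at or before position t.
        scores = [sum(a * b for a, b in zip(q, keys[s])) / math.sqrt(d)
                  for s in range(t + 1)]
        # Softmax turns the scores into weights that sum to 1.
        m = max(scores)
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        weights = [w / total for w in weights]
        # The output is the weighted average of the visible value vectors.
        out = [sum(w * values[s][j] for s, w in enumerate(weights))
               for j in range(len(values[0]))]
        outputs.append(out)
    return outputs

# With all-zero queries every visible position gets equal weight,
# so each output is the running mean of the values seen so far.
queries = [[0.0, 0.0]] * 3
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[1.0], [3.0], [5.0]]
print(causal_attention(queries, keys, values))
```

In a full Transformer block, this output then passes through a dense (feed-forward) layer, and the pair is repeated many times.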

Scaling Laws and Model Size: In AI, larger models generally perform better, but efficient training methods are crucial to manage the computational demands. DeepSeek's focus on efficiency allows it to push the boundaries of model size and capabilities.
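A common rule of thumb from the scaling-law literature estimates training compute as roughly 6 FLOPs per parameter per token. The sketch below applies it to a sparse model by counting only the parameters active for each token. The parameter and token counts are taken from DeepSeek's public V3 technical report as recalled here and should be treated as assumptions; the estimate itself is an approximation, not a reported figure.

```python
def training_flops(active_params, tokens):
    """Rule-of-thumb training compute: about 6 FLOPs per active parameter per token."""
    return 6 * active_params * tokens

# Assumed figures (see lead-in); not stated in this article.
TOTAL_PARAMS = 671e9    # all parameters, including inactive experts
ACTIVE_PARAMS = 37e9    # parameters actually used for each token
TOKENS = 14.8e12        # pre-training tokens

moe = training_flops(ACTIVE_PARAMS, TOKENS)
dense = training_flops(TOTAL_PARAMS, TOKENS)

print(f"sparse (MoE) estimate:       {moe:.2e} FLOPs")
print(f"dense model of the same size: {dense:.2e} FLOPs")
print(f"ratio: {dense / moe:.1f}x")
```

Under these assumptions, a dense model with the same total parameter count would need roughly 18 times more training compute.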

Core Innovation

DeepSeek achieved breakthrough efficiency in AI model training through innovative architecture design and low-level optimization. Its approach to mixture of experts (MoE) models and custom CUDA implementations demonstrated that state-of-the-art AI models could be trained at a fraction of the traditional cost.

Hardware Engineering

"Making a billion parameters feel like a million requires intimate knowledge of how every transistor behaves."

The remarkable efficiency gains came from DeepSeek's mastery of low-level GPU optimization. By scheduling computations at the assembly level and managing memory bandwidth with extreme precision, the team achieved performance that seemed impossible with standard approaches.

Compute Infrastructure

The scale of modern AI demands unprecedented computing power. To put it in perspective, a single modern AI training cluster can consume more electricity than most cities.

The new mega-clusters being built by tech giants represent a fundamental shift in computing infrastructure, with multi-gigawatt facilities becoming the new standard for AI development.

Infrastructure Innovation

"The power spikes from AI training can literally blow up power plants."

The challenges of running massive AI clusters have driven innovation across every layer of computing infrastructure - from power delivery to cooling systems. Companies have had to develop novel solutions for everything from power grid stability to water circulation.

Model Architecture

DeepSeek's breakthrough came from rethinking how large language models process information. Its sparse mixture of experts approach allows the model to activate only the relevant experts for each token, dramatically reducing computational requirements while maintaining or improving performance.

Export Controls

"The export controls aren't about stopping China from training models - they're about limiting their ability to deploy AI at scale."

The geopolitical dimensions of AI development have led to complex restrictions on hardware exports. These controls reflect growing recognition of AI's strategic importance.

Cultural Differences

"In Taiwan, when there's an earthquake, TSMC employees just show up at the fab without being called."

The contrast between different approaches to technology development - from work culture to risk tolerance - highlights how regional differences shape innovation patterns.

Future Outlook

The trajectory of AI development suggests continuing rapid progress, with reasoning capabilities becoming increasingly sophisticated. However, the massive infrastructure requirements mean deployment may be constrained by physical limitations rather than algorithmic breakthroughs.

Regulatory Environment

The regulatory landscape for AI development is evolving rapidly, with different regions taking varied approaches. This creates a complex environment where companies must navigate both technical and legal challenges in pursuing AI advancement.

Competitive Dynamics

"The bitter lesson in AI is that simple approaches with massive scale tend to win."

The competitive landscape in AI is shaped by access to compute resources, technical talent, and data, with different players taking varied approaches to these fundamental challenges.

Infrastructure Challenge

The unprecedented power and cooling requirements of AI clusters are pushing the boundaries of infrastructure technology. Innovation in power delivery, cooling systems, and high-speed interconnects has become crucial for continued advancement.

Economic Implications

The economics of AI deployment are rapidly evolving, with costs falling dramatically even as capabilities increase. This creates complex dynamics around business models and deployment strategies.